If you’ve heard of RAG in AI but aren’t sure what it does, check out this explainer. We cover how it works, where it fits in the AI stack, and why it’s a big deal for enterprise use cases.

Published 06 Aug 2025

Why Retrieval Augmented Generation (RAG) is the Future of Contextual AI

In recent years, large language models (LLMs) have advanced significantly, changing how we use them and opening up new possibilities in the field of Artificial Intelligence (AI).

However, LLMs have always struggled with two things: contextual accuracy and data freshness. A model knows only what was in its training data, so it can miss domain-specific details and recent information. This is where Retrieval Augmented Generation (RAG) comes into play, supplying relevant context at query time so that LLMs can generate context-aware responses.

This blog explores the concept of RAG-based systems, the technologies that power them, and their role in developing next-generation contextual AI applications.

What is a RAG system?

Retrieval Augmented Generation (RAG) systems integrate the capabilities of generative AI with retrieval-based context enrichment, which significantly enhances the accuracy and relevance of the generated responses.

In simpler terms, RAG is a way to make AI smarter by combining its ability to respond with real information from trusted sources.

A RAG system is powered by these two components:

- Retrieval: The system first embeds the user query and runs a semantic search over embeddings stored in a vector database to identify and retrieve the most relevant content.

- Generation: The retrieved documents serve as a contextual input for the large language model, which then produces contextually aware and accurate responses.

This integration between retrieval and generation helps address critical generative AI challenges like hallucinations and outdated information, because RAG supplies updated and precise context at query time. The ability to reference near-real-time and domain-specific data boosts reliability and user trust in AI systems.
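The two steps can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the three-dimensional vectors are hard-coded stand-ins for real embeddings, the `retrieve` and `build_prompt` helpers are hypothetical names, and the final call to an LLM is left out.

```python
import math

# Toy 3-dimensional "embeddings", hard-coded for illustration only.
# A real system would get these from an embedding model and a vector database.
DOCUMENTS = {
    "Refunds are processed within 5 business days.": [0.9, 0.1, 0.0],
    "Our office is closed on public holidays.": [0.1, 0.9, 0.1],
    "Premium plans include priority support.": [0.2, 0.1, 0.9],
}


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query_vector, top_k=1):
    """Retrieval step: rank stored documents by similarity to the query."""
    ranked = sorted(
        DOCUMENTS.items(),
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:top_k]]


def build_prompt(question, context_docs):
    """Generation step (input side): place retrieved text ahead of the question."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical query embedding for "How long do refunds take?"
query_vector = [0.8, 0.2, 0.1]
prompt = build_prompt("How long do refunds take?", retrieve(query_vector))
print(prompt)
```

The prompt that comes out is what would be sent to the language model, so the model answers from the retrieved text rather than from its training data alone.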

For example, in medical applications, a RAG-based chatbot can swiftly retrieve accurate medical literature and patient histories, ensuring responses that are both accurate and context-aware.

What powers a RAG system?

A RAG system is powered by vectors. In machine learning, a vector represents a data point as numerical coordinates within a multidimensional space.

Embeddings are dense numerical vectors that encode textual, visual, or audio data. They capture the semantic meaning of, and relationships between, entities, which in turn allows AI systems to quantify similarity and context.

Embeddings are typically generated using neural network models that are trained to capture semantic relationships from large datasets. Techniques such as Word2Vec, GloVe, FastText, and transformer-based models like BERT, RoBERTa, and SentenceTransformers produce embeddings that encode the semantic nuances of language into high-dimensional numeric vectors.

As mentioned earlier, a critical aspect of embeddings is their ability to represent context.

For instance, transformer-based models leverage attention mechanisms to produce context-aware embeddings, dynamically adjusting representations based on surrounding words. Consequently, the embeddings of homonyms such as "bank" (financial institution) and "bank" (river edge) differ based on context.

RAG use cases like semantic search, clustering, and recommendation systems are powered by similarity metrics such as cosine similarity and Euclidean distance, which measure how closely related two embeddings are within a vector space.
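As a rough sketch of how the two metrics differ, the snippet below implements both with the standard library. The vectors are made up for illustration. Cosine similarity looks only at direction, while Euclidean distance is also sensitive to length; once vectors are scaled to unit length, the two are tied together by distance² = 2 − 2 × cosine, so they rank neighbours identically.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def norm(a):
    return math.sqrt(dot(a, a))


def cosine_similarity(a, b):
    """1.0 means same direction; magnitude is ignored."""
    return dot(a, b) / (norm(a) * norm(b))


def euclidean_distance(a, b):
    """0.0 means identical points; magnitude matters."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def unit(a):
    """Scale a vector to length 1."""
    n = norm(a)
    return [x / n for x in a]


a = [1.0, 2.0, 3.0]
b = [2.0, 4.0, 6.0]  # same direction as a, twice the length

print(cosine_similarity(a, b))   # direction only
print(euclidean_distance(a, b))  # sensitive to length

# For unit vectors: distance**2 == 2 - 2 * cosine
u, v = unit([0.9, 0.1, 0.3]), unit([0.2, 0.8, 0.5])
lhs = euclidean_distance(u, v) ** 2
rhs = 2 - 2 * cosine_similarity(u, v)
print(abs(lhs - rhs) < 1e-9)
```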

Sentences or paragraphs that convey similar semantic meanings are clustered together, which in turn enables an effective and accurate retrieval of semantically relevant documents. For example, words like “dog” and “canine” would appear close within the vector space, reflecting their semantic relationship.

Let’s look at two semantically close words, car and automobile, and see how the similarity score between their embeddings is calculated.

A simplified, illustrative 5-dimensional embedding might look like:

- Car: [0.9, 0.1, 0.3, 0.7, 0.5]

- Automobile: [0.85, 0.15, 0.25, 0.65, 0.55]

The numeric closeness of these embeddings reflects their semantic similarity.

Now, let's compute the cosine similarity between these two embeddings:

Cosine Similarity (A, B) = (A · B) / (||A|| × ||B||)

First, calculate the dot product:

(0.9 × 0.85) + (0.1 × 0.15) + (0.3 × 0.25) + (0.7 × 0.65) + (0.5 × 0.55)

= 0.765 + 0.015 + 0.075 + 0.455 + 0.275

= 1.585

Next, calculate their magnitudes:

Magnitude of Car vector

= sqrt(0.9² + 0.1² + 0.3² + 0.7² + 0.5²)

= sqrt(0.81 + 0.01 + 0.09 + 0.49 + 0.25)

= sqrt(1.65) ≈ 1.2845

Magnitude of Automobile vector

= sqrt(0.85² + 0.15² + 0.25² + 0.65² + 0.55²)

= sqrt(0.7225 + 0.0225 + 0.0625 + 0.4225 + 0.3025)

= sqrt(1.5325) ≈ 1.2379

Finally, calculate the cosine similarity:

Cosine Similarity (Car, Automobile)

= 1.585 / (1.2845 × 1.2379)

≈ 1.585 / 1.5901

≈ 0.9968

A cosine similarity close to 1 indicates a high semantic similarity between "car" and "automobile" in the vector space.
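The same calculation can be checked with a few lines of standard-library Python. This is only a cross-check of the arithmetic on the illustrative vectors above; the variable names are arbitrary.

```python
import math

car = [0.9, 0.1, 0.3, 0.7, 0.5]
automobile = [0.85, 0.15, 0.25, 0.65, 0.55]

# Dot product: multiply matching coordinates and sum the results.
dot_product = sum(c * a for c, a in zip(car, automobile))

# Magnitude: square root of the sum of squared coordinates.
car_norm = math.sqrt(sum(c * c for c in car))
automobile_norm = math.sqrt(sum(a * a for a in automobile))

similarity = dot_product / (car_norm * automobile_norm)

print(round(dot_product, 4))
print(round(car_norm, 4), round(automobile_norm, 4))
print(round(similarity, 4))
```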

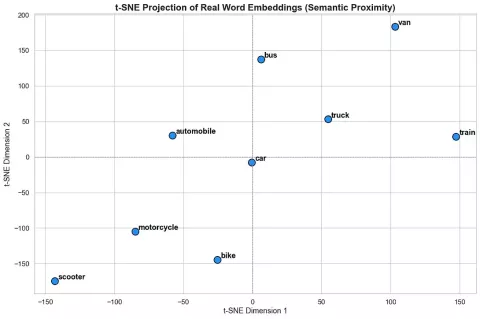

Here’s a visual representation of how semantically similar words appear in vector space.

from sentence_transformers import SentenceTransformer
from sklearn.manifold import TSNE
from sklearn.preprocessing import normalize
import seaborn as sns
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd

# Load model and words
model = SentenceTransformer('all-MiniLM-L6-v2')
words = [
    "car", "automobile", "truck", "bike", "bus",
    "motorcycle", "scooter", "train", "van"
]

# Get and normalize embeddings
embeddings = model.encode(words)
embeddings = normalize(embeddings)

# Apply t-SNE (perplexity must be smaller than the number of words)
tsne = TSNE(n_components=2, perplexity=5, init='pca', learning_rate='auto', max_iter=1000, random_state=42)
reduced_embeddings = tsne.fit_transform(embeddings)

# Center coordinates around (0, 0)
x_centered = reduced_embeddings[:, 0] - np.mean(reduced_embeddings[:, 0])
y_centered = reduced_embeddings[:, 1] - np.mean(reduced_embeddings[:, 1])

# Create DataFrame for Seaborn
df = pd.DataFrame({
    'x': x_centered,
    'y': y_centered,
    'label': words
})

# Set Seaborn style
sns.set(style="whitegrid", font_scale=1.1)

# Create figure and axes
plt.figure(figsize=(12, 8))
ax = sns.scatterplot(data=df, x='x', y='y', s=150, color='dodgerblue', edgecolor='black', linewidth=1.2)

# Draw quadrant lines
plt.axhline(0, color='gray', linestyle='--', linewidth=0.8)
plt.axvline(0, color='gray', linestyle='--', linewidth=0.8)

# Annotate each point, offset slightly so the label does not cover the marker
for i in range(len(df)):
    ax.text(df['x'][i] + 2.5, df['y'][i] + 2.5, df['label'][i],
            horizontalalignment='left', size='medium', color='black', weight='semibold')

# Final polish
plt.title('t-SNE Projection of Real Word Embeddings (Semantic Proximity)', fontsize=16, weight='bold')
plt.xlabel('t-SNE Dimension 1')
plt.ylabel('t-SNE Dimension 2')
plt.tight_layout()
plt.show()

You can copy and run the above code to reproduce the chart.

Where do I store the vectors?

Traditional databases are primarily structured around exact-match queries and relational schemas and hence struggle with the approximate similarity searches required by vector embeddings. Vectors are stored in vector databases, which are specialised database systems optimised for storing, indexing, and rapidly retrieving vector data.

Vector databases have an edge over traditional database systems as they use advanced indexing and searching techniques such as Approximate Nearest Neighbor (ANN) search, allowing quick retrieval of semantically similar documents. This capability enables them to power applications that rely heavily on semantic context, such as recommendation engines, semantic search, and intelligent conversational agents.
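To give a feel for how ANN search avoids comparing a query against every stored vector, here is a toy sketch of one ANN family, locality-sensitive hashing with random hyperplanes. It is an assumption-laden illustration: the `RandomHyperplaneIndex` class is hypothetical, the data is random, and production vector databases use more sophisticated index structures.

```python
import math
import random
from collections import defaultdict


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


class RandomHyperplaneIndex:
    """Toy locality-sensitive hashing index for cosine similarity."""

    def __init__(self, dim, num_planes=4, seed=0):
        rng = random.Random(seed)
        # Each random hyperplane contributes one bit of the bucket key.
        self.planes = [
            [rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_planes)
        ]
        self.buckets = defaultdict(list)

    def _key(self, vector):
        # Bit is 1 if the vector lies on the non-negative side of the hyperplane.
        return tuple(
            int(sum(p * v for p, v in zip(plane, vector)) >= 0)
            for plane in self.planes
        )

    def add(self, item_id, vector):
        self.buckets[self._key(vector)].append((item_id, vector))

    def search(self, query, top_k=3):
        # Only vectors in the query's bucket are scored, not the whole index.
        candidates = self.buckets.get(self._key(query), [])
        scored = sorted(
            candidates,
            key=lambda item: cosine_similarity(query, item[1]),
            reverse=True,
        )
        return [item_id for item_id, _ in scored[:top_k]]


rng = random.Random(42)
index = RandomHyperplaneIndex(dim=8)
vectors = {i: [rng.gauss(0, 1) for _ in range(8)] for i in range(1000)}
for item_id, vector in vectors.items():
    index.add(item_id, vector)

results = index.search(vectors[7], top_k=3)
bucket_size = len(index.buckets[index._key(vectors[7])])
print(results, f"scored {bucket_size} of {len(vectors)} vectors")
```

The trade-off is the "approximate" in ANN: a true nearest neighbour that falls just across a hyperplane lands in a different bucket and is missed, which is the price paid for scoring only a fraction of the index.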

Popular vector databases and vector search libraries include:

- FAISS (Facebook AI Similarity Search): A similarity-search library rather than a full database, known for its speed and scalability and especially suitable for local or high-performance server deployments.

- Pinecone: A fully managed, cloud-native vector database that simplifies deployment, scaling, and integration.

- Weaviate: An open-source, schema-based vector database supporting hybrid search capabilities.

- Qdrant and Chroma: Developer-friendly open-source solutions ideal for prototyping and rapid deployment.

Practical use cases of RAG

The impact of RAG systems is pretty clear when you see how different industries are using them.

- Conversational AI: Chatbots benefit the most from using RAG systems. They can give more accurate, relevant answers in real time, especially in customer support and technical assistance.

- Content and Product Recommendations: User experiences can be personalised by matching their interests with semantically relevant content.

- Enterprise Knowledge Bases: Need answers fast? RAG helps internal teams pull up the right information from huge piles of corporate documents, significantly improving productivity.

- Semantic Search Engines: Search engines get smarter with RAG. Instead of just matching keywords, they match on meaning, so results stay relevant even when the query and the document use different words.

Are there any challenges to watch out for with RAG systems?

Yes! Despite all the strengths of RAG systems that we have discussed throughout the article, there are a few things to watch out for to keep things running smoothly and up-to-date.

- Scalability: As the volume of indexed data grows, index size and query latency grow with it, so indexing, sharding, and deployment strategies need to be planned for scale.

- Data Freshness: Vector embeddings must be updated regularly so that retrieval runs on fresh data.

- Embedding Drift: When the embedding model or the underlying data changes over time, older vectors stop lining up with newer ones. Left unmonitored, this drift degrades retrieval accuracy.
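For data freshness, one common approach is to store a hash of each document alongside its embedding and re-embed only what has changed. The sketch below assumes that approach; the `find_stale` and `find_deleted` helpers and the sample documents are hypothetical, and the actual re-embedding call is left out.

```python
import hashlib


def content_hash(text):
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def find_stale(documents, indexed_hashes):
    """Return IDs of documents that are new or changed since last indexing.

    documents: {doc_id: current text}
    indexed_hashes: {doc_id: hash of the text that was embedded}
    """
    return [
        doc_id
        for doc_id, text in documents.items()
        if indexed_hashes.get(doc_id) != content_hash(text)
    ]


def find_deleted(documents, indexed_hashes):
    """Return IDs that are still in the index but no longer in the source."""
    return [doc_id for doc_id in indexed_hashes if doc_id not in documents]


# State of the index after the last run.
indexed_hashes = {
    "pricing": content_hash("Basic plan: $10/month"),
    "hours": content_hash("Open 9am to 5pm"),
    "legacy": content_hash("Old product page"),
}

# Current state of the source documents.
documents = {
    "pricing": "Basic plan: $12/month",         # changed
    "hours": "Open 9am to 5pm",                 # unchanged
    "returns": "Returns accepted for 30 days",  # new
}

print(find_stale(documents, indexed_hashes))    # needs (re-)embedding
print(find_deleted(documents, indexed_hashes))  # should be removed from the index
```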

RAG combines the creativity of generative models with the accuracy of up-to-date retrieval to offer smarter and more grounded AI. And no, it's not just a trend. It’s a powerful and practical upgrade to how AI systems understand and respond to queries. If you're working on anything that involves language, knowledge, or decision-making, RAG is worth paying attention to.

Want to see how RAG could work for your business? We can help you build, test, and deploy your own RAG-powered solution. Let’s talk!